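TTFB (Time to First Byte) is the time between a request for a page and the arrival of the first byte of the response. It covers redirects, DNS lookup, connection and TLS setup, and server processing, so it is effectively your server’s “first response” latency: how fast the origin (or edge) starts delivering HTML to users and Googlebot.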

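In 2026, TTFB matters for SEO because it affects crawl efficiency, indexing velocity, and how stable your Core Web Vitals remain under real-world load (not just in lab tests). TTFB is not a Core Web Vital itself, but it happens before Largest Contentful Paint (LCP), so every TTFB delay is added directly to LCP.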

When TTFB is an SEO issue (and when it’s not)

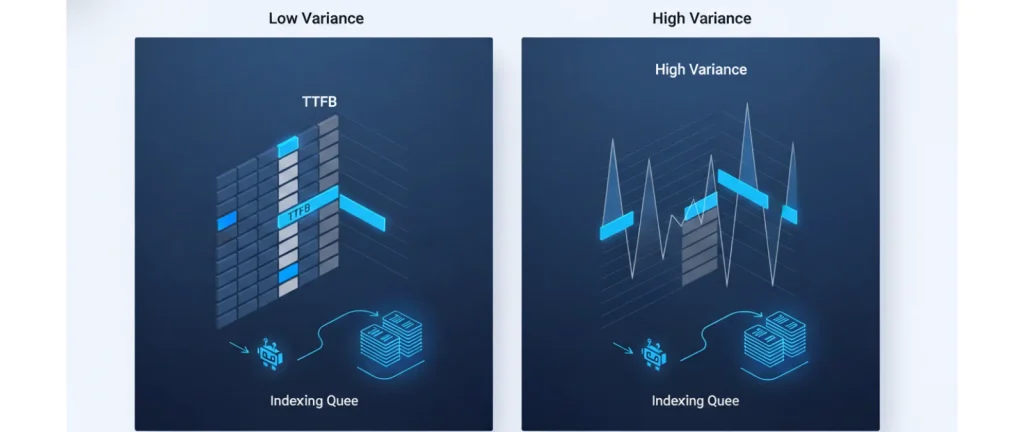

TTFB becomes an SEO blocker when it is high and variable, because Googlebot experiences the same infrastructure instability as users: timeouts, slow fetches, fewer URLs crawled per session, and slower reprocessing of important pages.

If your pages are fast “sometimes” but slow during peaks or for certain regions, your SEO performance will feel unpredictable even if your median looks okay.
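To see why a healthy median can hide an SEO problem, the sketch below summarizes a set of TTFB samples by median and tail percentiles. The function name `ttfb_summary` and the sample values are hypothetical; in practice the samples would come from your RUM data or server logs.

```python
from statistics import median, quantiles


def ttfb_summary(samples_ms: list[float]) -> dict[str, float]:
    """Summarize TTFB samples (milliseconds) by median and tail percentiles."""
    # quantiles(n=100) returns 99 cut points; index k-1 is the k-th percentile.
    cuts = quantiles(samples_ms, n=100, method="inclusive")
    p50 = median(samples_ms)
    p95 = cuts[94]
    return {
        "p50": p50,
        "p75": cuts[74],
        "p95": p95,
        # How many times slower the tail is than the typical request.
        "tail_ratio": p95 / p50,
    }


# Hypothetical samples: mostly fast, with a slow tail during peak hours.
samples = [300.0] * 90 + [2500.0] * 10
summary = ttfb_summary(samples)
print(summary)
```

With these samples the median is 300 ms while p95 is 2500 ms: one request in ten is more than eight times slower than the median suggests, which is exactly the “fast sometimes” pattern described above.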

TTFB targets (practical thresholds)

Use TTFB thresholds as triage, not as a vanity benchmark: your goal is to reduce variance first, then reduce the median.

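A common operational target is to keep TTFB at or below ~800 ms at the 75th percentile (ideally ~400 ms) for key templates, then focus on consistency across regions and peak traffic windows. The 800 ms figure matches web.dev’s “good” threshold; anything above 1.8 s is rated “poor”.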
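As a triage aid, the sketch below buckets each template by its 75th-percentile TTFB using the 800 ms / 1.8 s thresholds. The per-template samples are hypothetical, and `classify_ttfb` is an illustrative helper, not part of any tool.

```python
from statistics import quantiles

# Thresholds in line with web.dev's TTFB guidance, assessed at the 75th percentile.
GOOD_MS = 800
POOR_MS = 1800


def p75(samples_ms: list[float]) -> float:
    # quantiles(n=4) returns [Q1, median, Q3]; Q3 is the 75th percentile.
    return quantiles(samples_ms, n=4, method="inclusive")[2]


def classify_ttfb(samples_ms: list[float]) -> str:
    value = p75(samples_ms)
    if value <= GOOD_MS:
        return "good"
    if value <= POOR_MS:
        return "needs improvement"
    return "poor"


# Hypothetical per-template TTFB samples in milliseconds.
templates = {
    "article": [320, 350, 410, 380, 450, 390, 360, 700],
    "category": [600, 900, 1100, 950, 1200, 1000, 870, 1300],
    "product": [1500, 2100, 2600, 1900, 2400, 2200, 1800, 3000],
}

for name, samples in templates.items():
    print(name, round(p75(samples)), classify_ttfb(samples))
```

Using p75 rather than the mean keeps one extreme outlier (the 700 ms article sample) from dominating the bucket while still reflecting most users’ experience.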

30–60 minute TTFB diagnosis (step-by-step)

- Confirm it’s server-side: Compare field behavior (real user regions, peak hours) vs lab tests; if lab is fine but field is bad, you likely have network/routing, edge, or load-related issues.

- Segment by template: Homepage, category/listing, article, product/service page; TTFB issues are often isolated to DB-heavy templates.

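- Check crawl/index signals: When origin responses become slow or unstable, Googlebot tends to reduce its crawl rate, so look for delayed indexing and reduced crawl depth (the Crawl Stats report in Google Search Console shows average response time alongside crawl requests).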

- Find variance sources: Look for spikes linked to cron jobs, cache misses, traffic bursts, database locks, or slow third-party calls on the server.
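The template segmentation and variance hunt can be combined into one pass over your logs. The sketch below assumes hypothetical log records of the form `(template, hour_of_day, ttfb_ms)` and flags any template/hour window whose p75 exceeds twice that template’s overall median. The `factor=2.0` cutoff is an arbitrary starting point, not a standard.

```python
from collections import defaultdict
from statistics import median, quantiles


def p75(values: list[float]) -> float:
    # quantiles() needs at least two data points on older Python versions.
    if len(values) < 2:
        return float(values[0])
    return quantiles(values, n=4, method="inclusive")[2]


def find_spikes(records: list[tuple[str, int, float]], factor: float = 2.0):
    """Flag (template, hour) windows whose p75 exceeds factor x the template median."""
    by_template = defaultdict(list)
    by_window = defaultdict(list)
    for template, hour, ttfb in records:
        by_template[template].append(ttfb)
        by_window[(template, hour)].append(ttfb)

    spikes = []
    for (template, hour), values in sorted(by_window.items()):
        # The median is robust, so a few spike samples barely move the baseline.
        baseline = median(by_template[template])
        window_p75 = p75(values)
        if window_p75 > factor * baseline:
            spikes.append((template, hour, window_p75, baseline))
    return spikes


# Hypothetical log records: the category template slows down at 03:00,
# e.g. while a nightly cron job competes for the database.
records = [
    ("article", 9, 300), ("article", 9, 320),
    ("article", 10, 310), ("article", 10, 330),
    ("category", 9, 400), ("category", 9, 420),
    ("category", 10, 410), ("category", 10, 430),
    ("category", 3, 1500), ("category", 3, 1700),
]

for spike in find_spikes(records):
    print(spike)
```

Once a window is flagged, correlate its time range with cron schedules, deploys, cache purges, and traffic graphs to find the actual source.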

Root causes by layer (and the fastest fixes)

- Origin server overload: Add caching at the right layer (full-page caching where safe; object caching for dynamic views) and reduce expensive server-side work per request.

- Database bottlenecks: Fix missing indexes, reduce query count, eliminate N+1 patterns, cache expensive queries, and isolate slow endpoints.

- Network/geo latency: Put a CDN/edge layer in front of the origin to stabilize delivery and reduce regional RTT, especially for international audiences.

- Rendering strategy mismatch: For SEO-critical routes, ensure HTML is reliably available (SSR/SSG where appropriate) so crawlers aren’t delayed by rendering queues.
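To illustrate the “full-page caching where safe” fix, here is a minimal in-process cache with a fixed TTL. It is a sketch only: `PageCache` and `render_article` are hypothetical names, the cache is not shared across worker processes, and in production this role is usually played by a reverse proxy or CDN. Only cache pages whose HTML is identical for every visitor (no personalization or session data).

```python
import time
from typing import Callable


class PageCache:
    """Minimal in-process full-page cache with a fixed TTL (illustrative only)."""

    def __init__(self, render: Callable[[str], str], ttl_seconds: float = 60.0,
                 clock: Callable[[], float] = time.monotonic):
        self._render = render
        self._ttl = ttl_seconds
        self._clock = clock
        self._entries: dict[str, tuple[float, str]] = {}  # path -> (stored_at, html)
        self.misses = 0

    def get(self, path: str) -> str:
        now = self._clock()
        entry = self._entries.get(path)
        if entry is not None and now - entry[0] < self._ttl:
            return entry[1]  # hit: no server-side rendering work
        self.misses += 1
        html = self._render(path)  # miss: pay the full rendering cost once
        self._entries[path] = (now, html)
        return html


def render_article(path: str) -> str:
    # Hypothetical stand-in for an expensive, database-backed template render.
    return f"<html><body>{path}</body></html>"


# A fake clock makes TTL expiry easy to demonstrate.
fake_now = [0.0]
cache = PageCache(render_article, ttl_seconds=60, clock=lambda: fake_now[0])
cache.get("/blog/ttfb")  # miss: renders
cache.get("/blog/ttfb")  # hit: served from memory
fake_now[0] = 61.0
cache.get("/blog/ttfb")  # miss again after the TTL expires
print(cache.misses)
```

The TTL bounds how stale a page can get; a shorter TTL means fresher HTML but more misses, and each miss costs full origin TTFB.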

A decision tree you can actually use (prioritization)

Start with variance: if you have spikes, treat them before chasing “best-in-class” medians.

Then prioritize by business impact: fix TTFB first on templates that drive acquisition (high-traffic / high-intent landing pages) and on templates that Googlebot hits most frequently (hubs, listings).

Only after stability is achieved should you invest in deeper architecture changes (rendering strategy changes, major DB refactors).
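The ordering above (variance first, then business impact) can be expressed as a sort key. In this sketch, the `TemplateStats` fields, the 3× p95/p50 instability cutoff, and the equal weighting of landing sessions and Googlebot hits are all hypothetical choices; tune them to your own data.

```python
from dataclasses import dataclass


@dataclass
class TemplateStats:
    name: str
    p50_ms: float
    p95_ms: float
    landing_sessions: int  # hypothetical: organic landing sessions per month
    googlebot_hits: int    # hypothetical: Googlebot requests per month (from logs)


def is_unstable(t: TemplateStats, max_tail_ratio: float = 3.0) -> bool:
    # Variance first: a large p95/p50 gap signals spikes worth fixing before medians.
    return t.p95_ms > max_tail_ratio * t.p50_ms


def prioritize(templates: list[TemplateStats]) -> list[str]:
    def key(t: TemplateStats):
        impact = t.landing_sessions + t.googlebot_hits  # hypothetical equal weighting
        # Unstable templates sort first (False < True), then by descending impact.
        return (not is_unstable(t), -impact)

    return [t.name for t in sorted(templates, key=key)]


# Hypothetical per-template stats.
templates = [
    TemplateStats("article", 350, 600, 120_000, 40_000),
    TemplateStats("category", 500, 2400, 30_000, 90_000),
    TemplateStats("product", 700, 1400, 80_000, 20_000),
    TemplateStats("search", 900, 4000, 2_000, 1_000),
]
print(prioritize(templates))
```

Here “category” comes first because it is both unstable and heavily crawled, while the high-traffic but stable “article” template waits until the spikes are handled.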