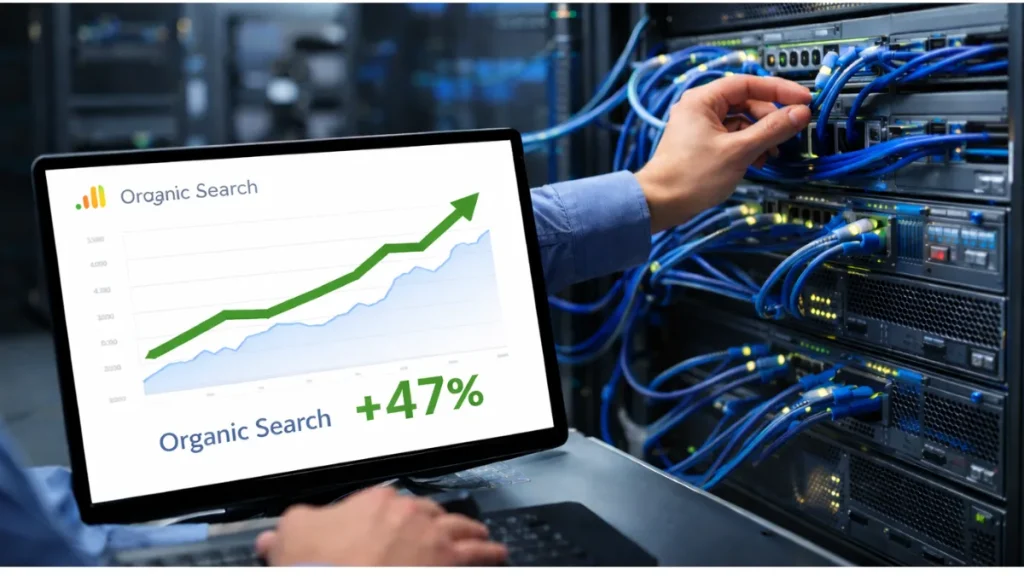

This case study documents how a B2B site reduced median Time to First Byte (TTFB) by 2.3 seconds and turned that technical improvement into measurable SEO growth: +47% organic traffic and faster indexing of new pages. The key lesson for 2026 is that "speed work" pays off only when it removes systemic bottlenecks that affect crawl efficiency, Core Web Vitals stability, and rendering consistency across regions, not when it is limited to cosmetic front-end tweaks.

Baseline problem (before)

The site had strong content and internal linking, but performance was unstable under load. Googlebot crawls frequently triggered spikes: slow responses, occasional 5xx errors, and long delays before newly published pages became indexed. In practice, this meant competitors with similar content were getting discovered and ranked earlier.

Primary diagnosis

Three issues stacked together:

- Slow backend response time caused by database-heavy templates and cache misses.

- Unoptimized server configuration (keep-alive, compression, and redirect chains).

- No edge layer, so international requests paid a high “distance tax.”

These bottlenecks directly inflated TTFB and reduced crawl efficiency.

What we changed (in order) to reduce TTFB by 2.3s

The fix was executed as a controlled sequence, one layer per week, because changing several variables at once makes it hard to tell which change actually moved the metrics.

1) Database bottleneck removal (week 1)

The biggest TTFB spikes came from database-heavy templates (category pages and “related resources” blocks).

Actions:

- Added indexes for the most common filters/sorts.

- Reduced query count by batching “related” content (removing N+1 patterns).

- Implemented object caching (Redis) for menus, taxonomies, and repeated blocks.

Result: TTFB dropped sharply during crawl bursts because requests stopped recomputing the same data repeatedly [[How database optimization prevents SEO performance bottlenecks]].
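The object-caching step follows the cache-aside pattern: check the cache first, and only on a miss run the expensive queries and store the result with a TTL. The sketch below assumes an in-process dictionary in place of Redis so it runs standalone; `ObjectCache`, the `menu:main` key, and the 300-second TTL are illustrative choices, not the site's actual configuration.

```python
import time
from typing import Any, Callable, Dict, Tuple


class ObjectCache:
    """Cache-aside store with a per-entry TTL; an in-process stand-in for Redis."""

    def __init__(self, ttl_seconds: float, clock: Callable[[], float] = time.monotonic) -> None:
        self.ttl = ttl_seconds
        self.clock = clock
        self._store: Dict[str, Tuple[float, Any]] = {}  # key -> (expires_at, value)
        self.hits = 0
        self.misses = 0

    def get_or_compute(self, key: str, loader: Callable[[], Any]) -> Any:
        now = self.clock()
        entry = self._store.get(key)
        if entry is not None and entry[0] > now:
            self.hits += 1
            return entry[1]
        # Miss or expired: run the expensive loader once and store the result.
        self.misses += 1
        value = loader()
        self._store[key] = (now + self.ttl, value)
        return value

    def invalidate(self, key: str) -> None:
        """Drop a key, e.g. when an editor changes the menu it caches."""
        self._store.pop(key, None)


fake_now = [0.0]  # controllable clock so the example is deterministic
cache = ObjectCache(ttl_seconds=300, clock=lambda: fake_now[0])
db_calls = []


def load_main_menu() -> list:
    db_calls.append("menu")  # stands in for the real menu/taxonomy queries
    return ["Products", "Resources", "Pricing"]


for _ in range(100):  # e.g. 100 page renders during a crawl burst
    cache.get_or_compute("menu:main", load_main_menu)
print(len(db_calls), cache.hits)  # 1 99

fake_now[0] = 301.0  # TTL expired: the next render recomputes once
cache.get_or_compute("menu:main", load_main_menu)
print(len(db_calls))  # 2
```

In production the dictionary would be replaced by a Redis client and the TTL tuned per object type; invalidating keys when menus or taxonomies change keeps cached blocks from going stale.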

2) Server configuration hardening (week 2)

Next, the server was tuned to improve crawl reliability.

Actions:

- Keep-alive enabled and tuned to reuse connections.

- Brotli enabled for text assets; cache headers normalized.

- Redirect chains reduced to a single 301 hop.

Result: fewer timeouts/5xx during peak loads and improved crawl efficiency [[How to optimize server configuration for faster crawling and indexing]].
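Collapsing redirect chains is mostly a data problem: every legacy URL should point straight at its final destination. A minimal sketch, assuming the redirect rules can be exported as a simple source-to-target mapping (the URLs and the `flatten_redirects` helper are hypothetical):

```python
from typing import Dict


def flatten_redirects(redirects: Dict[str, str], max_hops: int = 10) -> Dict[str, str]:
    """Point every source URL straight at its final destination (one 301 hop)."""
    flat: Dict[str, str] = {}
    for source, target in redirects.items():
        seen = {source}
        hops = 1
        # Follow the chain while the current target is itself redirected.
        while target in redirects:
            if target in seen or hops >= max_hops:
                raise ValueError(f"Redirect loop or overlong chain starting at {source}")
            seen.add(target)
            target = redirects[target]
            hops += 1
        flat[source] = target
    return flat


# Hypothetical three-hop chain: http -> https -> www -> new URL.
chains = {
    "http://example.com/old-guide": "https://example.com/old-guide",
    "https://example.com/old-guide": "https://www.example.com/old-guide",
    "https://www.example.com/old-guide": "https://www.example.com/guides/new-guide/",
}
flat = flatten_redirects(chains)
for source, target in flat.items():
    print(f"301 {source} -> {target}")
```

The flattened map can then be written back as server or edge redirect rules so that each legacy URL resolves in a single 301; the loop check guards against rules that would otherwise redirect forever.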

3) CDN + edge layer rollout (week 3)

A CDN was placed in front of the origin.

Actions:

- Static assets cached aggressively; HTML cached selectively (marketing + content pages).

- Edge rules enforced one canonical host and consistent trailing slash behavior.
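The edge rules come down to two small decisions per request: does this URL need a single 301 to its canonical form, and if not, how long may the CDN cache it? This is a Python sketch of that logic under stated assumptions (the `www.example.com` host, the section prefixes, and the TTL values are hypothetical); a real deployment would express the same rules in the CDN's own configuration or edge-function runtime.

```python
from typing import Optional
from urllib.parse import urlsplit, urlunsplit

# Hypothetical site settings; replace with the real canonical host and sections.
CANONICAL_SCHEME = "https"
CANONICAL_HOST = "www.example.com"
STATIC_EXTENSIONS = (".css", ".js", ".png", ".jpg", ".svg", ".woff2")
CACHEABLE_HTML_PREFIXES = ("/blog/", "/guides/", "/solutions/")


def canonical_redirect(url: str) -> Optional[str]:
    """Return the one-hop 301 target for a non-canonical URL, or None if already canonical."""
    parts = urlsplit(url)
    path = parts.path or "/"
    last_segment = path.rsplit("/", 1)[-1]
    # Directory-style pages get a trailing slash; file-like paths (with an extension) do not.
    if last_segment and "." not in last_segment:
        path += "/"
    canonical = urlunsplit((CANONICAL_SCHEME, CANONICAL_HOST, path, parts.query, ""))
    requested = urlunsplit((parts.scheme, parts.netloc.lower(), parts.path or "/", parts.query, ""))
    return None if canonical == requested else canonical


def cache_control_for(path: str) -> str:
    """Pick a Cache-Control header: long-lived static assets, short edge TTL for content HTML."""
    if path.endswith(STATIC_EXTENSIONS):
        # Assumes fingerprinted filenames, so a changed asset gets a new URL.
        return "public, max-age=31536000, immutable"
    if path.startswith(CACHEABLE_HTML_PREFIXES):
        # Browsers treat the page as immediately stale; the CDN may serve it for 10 minutes.
        return "public, max-age=0, s-maxage=600"
    # Everything else (e.g. account or search pages) bypasses shared caches.
    return "private, no-store"


print(canonical_redirect("http://example.com/guides/crawl-budget"))
# https://www.example.com/guides/crawl-budget/
print(canonical_redirect("https://www.example.com/guides/crawl-budget/"))  # None
print(cache_control_for("/assets/app.3f9c.js"))  # public, max-age=31536000, immutable
print(cache_control_for("/guides/crawl-budget/"))  # public, max-age=0, s-maxage=600
```

`s-maxage` applies only to shared caches such as a CDN, so this split lets the edge serve cached HTML while browsers still revalidate, and the redirect check produces one hop no matter how many canonical rules a URL violates.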

Result: reduced “distance tax” for international requests and stabilized performance across regions [[CDN and edge computing: How distributed infrastructure boosts SEO performance]].

At this stage, median TTFB had dropped from ~2.6s to ~0.3s (a 2.3s reduction), and variance collapsed. Fast, consistent responses are what allow Googlebot to raise its crawl rate, which in practice means deeper and more frequent crawling [[What is server response time (TTFB) and why it matters for SEO rankings?]].
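Median and spread are straightforward to compute from raw TTFB samples. The sketch below uses the interquartile range (IQR) as the measure of variance; the sample values are made up to mirror the article's ~2.6s and ~0.3s medians and are not the case-study data.

```python
import statistics
from typing import Dict, List


def ttfb_summary(samples_s: List[float]) -> Dict[str, float]:
    """Median and interquartile range (IQR) of TTFB samples, in seconds."""
    q1, _, q3 = statistics.quantiles(samples_s, n=4)
    return {"median": statistics.median(samples_s), "iqr": q3 - q1}


# Illustrative samples only (not the case-study data).
before = [1.9, 2.2, 2.4, 2.6, 2.6, 2.8, 3.1, 4.5, 5.2]
after = [0.22, 0.25, 0.28, 0.29, 0.30, 0.31, 0.33, 0.36, 0.41]

b, a = ttfb_summary(before), ttfb_summary(after)
print(f"median: {b['median']:.2f}s -> {a['median']:.2f}s (delta {b['median'] - a['median']:.2f}s)")
# median: 2.60s -> 0.30s (delta 2.30s)
print(f"IQR:    {b['iqr']:.2f}s -> {a['iqr']:.3f}s")
# IQR:    1.50s -> 0.080s
```

A shrinking IQR is what "variance collapsed" looks like in numbers: a long tail of slow responses (here the 4.5s and 5.2s samples) disappears, not just the typical request getting faster.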

Results, measurement, and why the SEO lift was real

Measurement setup (so results are attributable)

The team tracked performance and SEO in parallel:

- TTFB (lab + field): server logs + real-user monitoring snapshots, segmented by country/device.

- Crawl efficiency: the Search Console Crawl Stats report (crawl requests per day, average response time, 5xx rate) and server log files (Googlebot hit rate on key templates).

- Indexing speed: URL Inspection checks on newly published pages (time from publish → indexed).

- Organic growth: Search Console clicks/impressions on the same page groups (no new content published during the 3-week remediation window to avoid mixing variables).
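Most of this measurement can be reproduced from raw access logs. The following sketch assumes a hypothetical tab-separated export (country, status, TTFB in ms, path, user agent) and computes three of the signals listed above: median TTFB per country, Googlebot's 5xx rate and hit count per template, and the publish-to-indexed lag for a new page. The field layout, sample lines, and timestamps are illustrative only.

```python
import statistics
from collections import Counter, defaultdict
from datetime import datetime
from typing import Dict, Iterable, List, NamedTuple


class Hit(NamedTuple):
    country: str
    status: int
    ttfb_ms: float
    path: str
    is_googlebot: bool


def parse(lines: Iterable[str]) -> List[Hit]:
    """Parse the hypothetical tab-separated log format described above."""
    hits = []
    for line in lines:
        country, status, ttfb_ms, path, ua = line.rstrip("\n").split("\t")
        # User-agent match only; see the note below on verifying real Googlebot traffic.
        hits.append(Hit(country, int(status), float(ttfb_ms), path, "Googlebot" in ua))
    return hits


def median_ttfb_by_country(hits: List[Hit]) -> Dict[str, float]:
    buckets: Dict[str, List[float]] = defaultdict(list)
    for h in hits:
        buckets[h.country].append(h.ttfb_ms)
    return {country: statistics.median(values) for country, values in buckets.items()}


def googlebot_crawl_stats(hits: List[Hit]) -> dict:
    bot = [h for h in hits if h.is_googlebot]
    # Treat the first path segment as the template (e.g. /guides/..., /category/...).
    templates = Counter(h.path.strip("/").split("/")[0] or "home" for h in bot)
    errors = sum(1 for h in bot if 500 <= h.status <= 599)
    return {
        "requests": len(bot),
        "5xx_rate": errors / len(bot) if bot else 0.0,
        "by_template": dict(templates),
    }


def hours_to_index(published: str, indexed: str) -> float:
    """Lag between publication and the first URL Inspection check showing 'indexed'."""
    fmt = "%Y-%m-%d %H:%M"
    delta = datetime.strptime(indexed, fmt) - datetime.strptime(published, fmt)
    return delta.total_seconds() / 3600


GOOGLEBOT_UA = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
sample = [
    f"US\t200\t280\t/guides/crawl-budget/\t{GOOGLEBOT_UA}",
    "US\t200\t310\t/category/tools/\tMozilla/5.0 (Windows NT 10.0)",
    f"DE\t503\t1900\t/category/tools/\t{GOOGLEBOT_UA}",
    f"DE\t200\t350\t/guides/ttfb/\t{GOOGLEBOT_UA}",
]
hits = parse(sample)
print(median_ttfb_by_country(hits))  # {'US': 295.0, 'DE': 1125.0}
print(googlebot_crawl_stats(hits))
print(hours_to_index("2026-03-02 09:00", "2026-03-03 15:30"))  # 30.5
```

Matching on the user-agent string is a shortcut; Google recommends verifying Googlebot with a reverse DNS lookup, because the string is easy to spoof.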

Observed outcomes (8 weeks post-change)

- Median TTFB improved by 2.3s (≈ 2.6s → 0.3s).

- Crawl volatility dropped: fewer timeouts and fewer 5xx during crawl bursts.

- Indexing speed improved: new pages were discovered and indexed noticeably faster after publication.

- Organic traffic increased +47%, driven mainly by faster discovery of deep pages and better stability of Core Web Vitals on international traffic.

Why this worked (transferable lesson)

The biggest win was not “making pages faster.” It was removing systemic friction that forced Googlebot to waste resources per URL.

By fixing database hotspots, hardening server behavior, and adding a CDN/edge reliability layer, the site became cheaper to crawl and more stable to index. In response, Google allocated more crawling to it and refreshed rankings more confidently.